I am almost new to this field. Still learning.

Introduction

In the field of engineering, frequently we need to do system identification (SI), i.e. to infer the mathematical model of a dynamical system based on a set of time series data. A classical problem is the SI of a linear system 1, Given input and output , how does one determine the system matrices ? Note that the system states are not directly observed in the time series data, i.e. they are hidden. Over the long history of SI, many successful methods and algorithms have been developed. Some model the hidden states explicitly and some do not. Some can handle nonlinear systems and some do not. I am not an expert in SI and not going to talk about these algorithms.

In the machine learning community, it appears that people tend to reorganize and reformulate some old concepts into some “new ideas”. No offense. Indeed this is sometimes useful. In Hinton2013, several modeling methods for sequential data are divided into two categories: memoryless models and memoryful models. The memoryless models have limited memory window and the hidden state cannot be used efficiently. One example is the autoregressive model, which predicts the next term in a sequence from a fixed number of previous terms. Another example is the feed-forward neural nets, which generalize autoregressive models by using one or more layers of non-linear hidden units. The memoryful models infer the hidden state distribution at the cost of higher computational burden. One example is the linear systems algorithms that can be viewed as generative models with real-valued hidden states that cannot be observed directly. Another example is the hidden Markov models (HMM), which have a discrete one-of-N hidden state. In HMM, transitions between states are stochastic and controlled by a transition matrix. The outputs produced by a state are stochastic.

The last example of the memoryful models is the recurrent neural network (RNN), the topic of this post. It updates the hidden state in a deterministic nonlinear way. Compared to the aformentioned models, the RNN has the following advantages:

- Distributed hidden state allows the efficient storage of information about the past.

- Non-linear dynamics allows the update of hidden state in complicated ways.

- No need to infer hidden state, whose evolution is purely deterministic.

- Parameters in the RNN, i.e. the weights, are shared, which makes the model compact.

Mathematical Models of RNN

Vanilla RNN

This section is based on this.

The RNN centers around the following equation that describes the evolution of the hidden state, where is an activation function with weights . The time steps and makes the formula recurrent. The function and may be shared between different layers/time steps.

A vanilla RNN is It looks very much like the linear system discussed above, except that it has a nonlinear state equation.

There are multiple types of RNNs: one-to-one, one-to-many, many-to-one, many-to-many, etc. In other words, the inputs and outputs are not necessarily nonzero. For example, an RNN is many-to-one when all of its inputs are assumed to be nonzero and only one output is expected (e.g. at the end) - this could be the case for a sequence classification problem.

Gradient Problem

There is an infamous issue with RNN: the gradient problem, which prevented the effective training of RNNs for a period of time.

The training of an RNN requires a proper definition of the error, or the scalar loss function , where time steps are assumed, and represent the ground truth.

The weights are computed by the gradient descent method, where the gradient is computed from the back propagation (BP) of the error. In other words, one needs to obtain the derivative of w.r.t. via the chain rule, where

Now, focus on the weights for , i.e. , which will be written as for simplicity in the following. There is a “double dependency” in the gradient : (1) itself depends on all the past states; (2) a past state depends on the states prior to that state, too. Mathematically, where where makes the vector a diagonal matrix.

The gradient problem comes from the product in . For each term of the product where and are the maximum singular values of and , respectively. For the product

When is a function like , would be close to zero as the input moves away from zero. As for , is probably either or . Therefore, a sufficiently large , i.e. time span of dependency, can cause two issues for the BP,

- Vanishing gradients: , making . In other words, the past states cannot be practically involved in BP.

- Exploding gradients: , making . In other words, the BP may experience a computational overflow.

Another way to see the gradient problem in the vanilla RNN is via the propagation of the variation/error of the hidden state . This way is somewhat hand-waving but more concise. The error of is, where is element-wise vector product and the variation associated with the input is ignored. The second term in the bracket represents the contribution of the error of the previous states to the error of the current state, For situations discussed in the previous paragraph, the factor can be (1) vanishing, so that almost does not contribute to ; (2) exploding, so that the contribution of overwhelms the contributions of the other states.

The issue of vanishing and exploding gradients does not mean the RNN model is impractical, but rather means that the gradient descent becomes increasingly inefficient when the temporal span of the dependencies increases.

There are some fixes to circumvent the gradient issue while retaining the vanilla RNN structure,

- For vanishing gradients, one can perform a proper initialization of the weights, and incorporate a more well-behaved activation function, such as the rectified linear unit (ReLU):

- For exploding gradient, one can do a clipping trick with a threshold for the gradient ,

Long Short Term Memory

A more systematic solution to the vanishing gradient problem is the Long Short Term Memory (LSTM) model (Hochreiter1997) 2. It introduces a “memory cell” that enables the storage and access of information over long periods of time. The state equation for LSTM is now significantly expanded from the vanilla RNN, where is the sigmoid function acting as a switch and is a constant offset vector.

In the LSTM model, the current hidden state is no longer directly related to the current input and the previous hidden state. Rather, it is now an output from the memory cell . The memory cell is essentially a weighted sum of the past memory and the current information. In other words, it determines: (1) whether to activate the information from past steps, and (2) whether to overwrite the memory with the information from the current step. Specifically, three new gates are introduced,

- Input gate: Scale input to cell (write)

- Output gate: Scale output from cell (read)

- Forget gate: Scale old cell values (reset)

The effect of the gates is better seen via the variation approach w.r.t. the hidden states, the memory cells, and the weights . Note that corresponds to in the vanilla version and will again be written as below for simplicity. Also note that,

The variations of and are, where is a condensed term containing several weights, including . Note that only appears in the errors in the memory cell. Now, propagates into but does not necessarily propagate into . Instead, may be directly passed to without being affected by , thus avoiding the products of and the gradient problems.

Gated Recurrent Unit

The gated recurrent unit (GRU) is another RNN variant Chung2015. It is similar to LSTM, but computationally more efficient, as there are less parameters and less complex structure.

Mathematically, GRU is represented as,

The GRU merges the cell state and hidden state, and thus replaces and the output gate is no longer needed. Next, the GRU combines the forget and input gates into a single “update gate” , which enables the inclusion of long-term behaviors in the RNN. Finally, a reset gate is added to control the contribution of the previous states to the current prediction.

While many alternative LSTM architectures have been proposed, in Greff2016, it was found that the original LSTM performs reasonably well on various datasets and none of the eight investigated modifications (including GRU) significantly improves performance. Furthermore, it was found that the forget gate and the output activation function as its most critical components.

Example

PyTorch: Windows with Anaconda

Among the popular machine learning frameworks, I chose PyTorch for its mild learning curve. I planed to try RNN on my Windows laptop, but it turns out PyTorch does not support Python 2 on Windows/Anaconda. So I did the following to install a minimal working PyTorch.

A Python 3 environment has to be created first by

After a lengthy installation, the environment is then deployed by

The command for installing PyTorch is found here. In my case where a GPU is not available, the PyTorch ecosystem is installed by,

The Python 3 installation might alter the default browser for the Jupyter Notebook. To reset the browser, first fo the following in the command line,

And next specify the path to the browser in the config file,

Note that %s has to be in the string.

Toy Problem

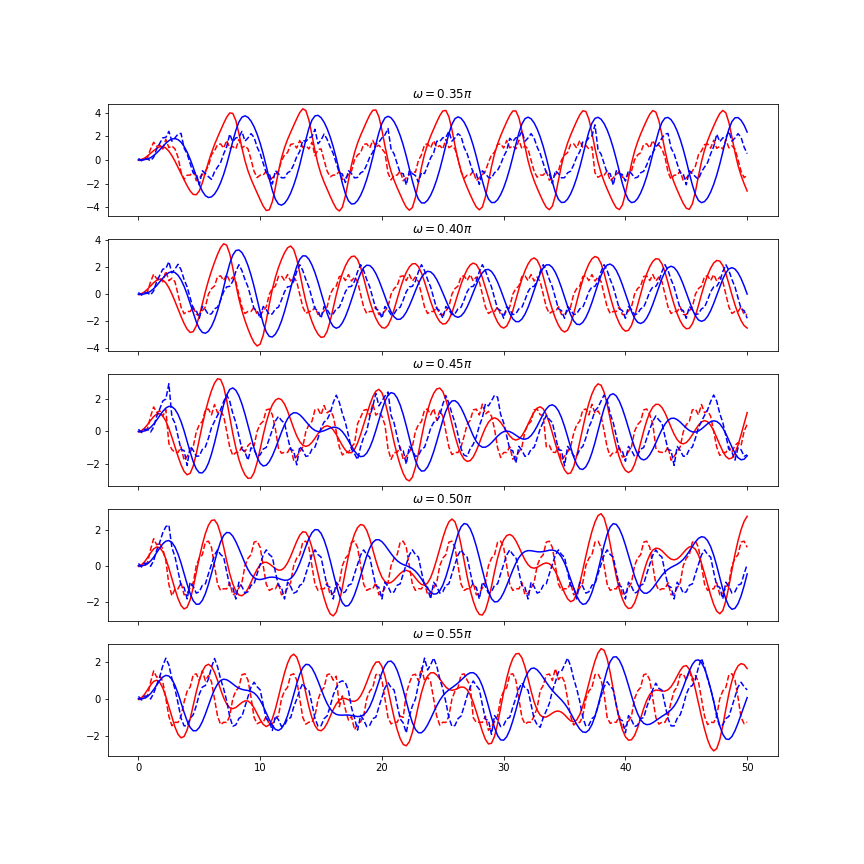

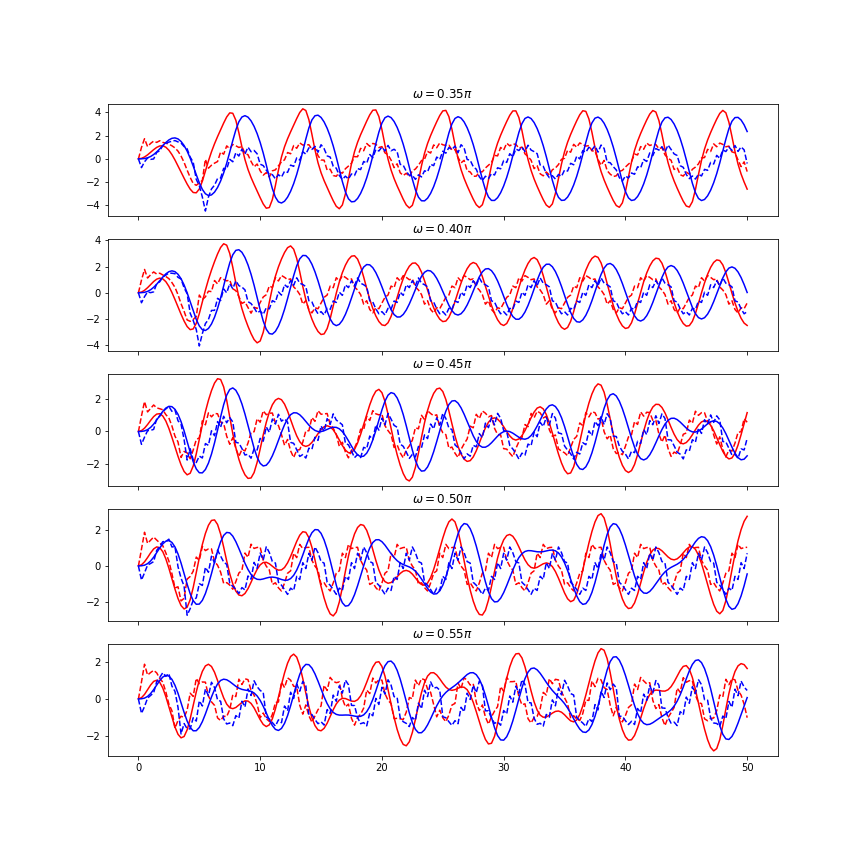

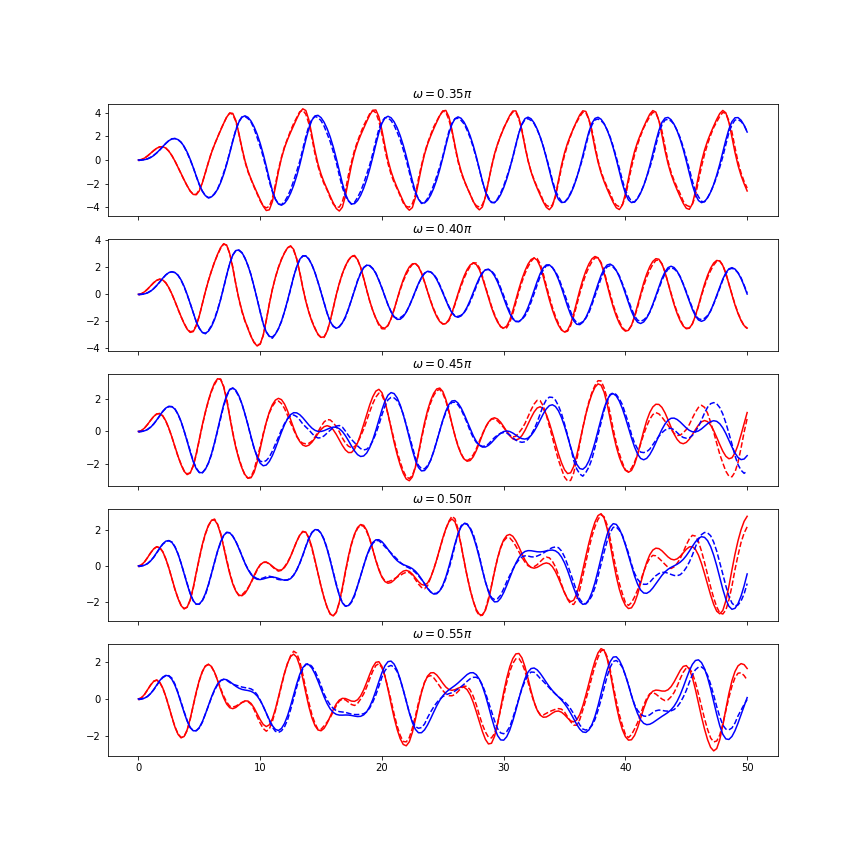

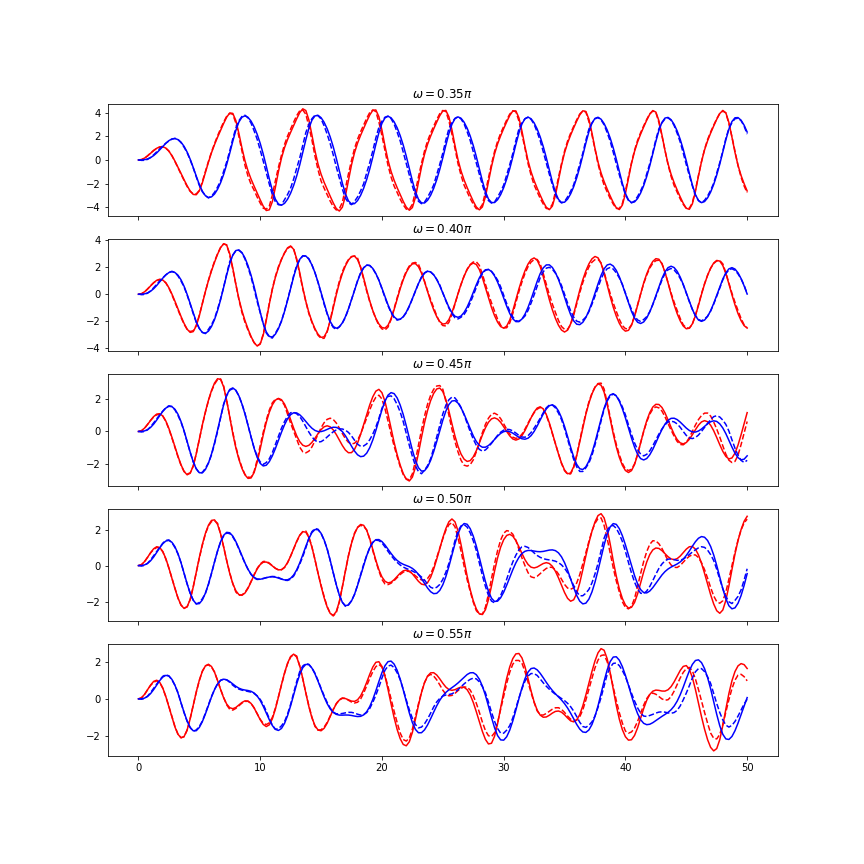

The dynamical system considered in this example is the forced Van der Pol (VdP) oscillator, where and are considered the states of this second-order ODE. The first term on the RHS is a nonlinear damping term that introduced rich dynamical properties to the oscillator. The third term on the RHS is considered the input to the system. In this study, the following parameters are used, Given the sinusoidal input associated with a certain value of , one can numerically integrate the system to obtain the response of the VdP oscillator. The goal of this exercise is to fit an RNN based on the VdP responses for several ’s, such that the RNN can reproduce the VdP responses for some other ’s.

The code implementation is adapted from here, and can be found from my github repo. Four types of RNNs are examined,

- Vanilla RNN with as activation function.

- Vanilla RNN with ReLU as activation function.

- LSTM RNN

- GRU RNN

All the RNNs have one hidden layer with 32 states and one linear output layer. The RNNs are trained via the MSE loss function using the Adam algorithm with a learning rate of 0.01 and 10000 epochs. The training data set includes the VdP responses for . The testing data sets includes the VdP responses for . In each training epoch, the data for gradient decent is a random segment (starts from beginning) of a VdP response randomly chosen from the training data set. A maximum of 120 time steps is used in the training. In other words, the last 80 time steps of the VdP response in the training data set are unseen by the RNN. Finally, the initial hidden states are set to be zero.

The results are shown in the figures below. The solid blue and red lines are the VdP responses found directly using numerical integration. The dashed lines are the RNN prediction. The vanilla RNNs do not do well in this problem. The failure probably can be explained the gradient vanishing problem that prevents the vanilla RNN to capture the long term periodic behavior. Both the LSTM and GRU do well. Note that the RNN predictions extrapolates well beyond the data used for training, as well as between the different values of ’s.

There are apprarently many tweaks and tunable parameters in the RNNs that can improve the prediction or generalize the model. For example,

- What are the optimal numbers of layers and hidden states for the RNN?

- What is the best way to utilize the training data set?

- How to take care of different initial conditions? Maybe via infering the proper initial hidden state in RNN?

- How to efficiently train the RNN for a larger parameter space that includes, e.g. ?

These might be done in a future post.